# [A Visual Guide to Mamba](https://newsletter.maartengrootendorst.com/p/a-visual-guide-to-mamba-and-state) (An "Alternative" Chapter 3)

Instead of extending Chapter 3, let's "replace" it! Or more accurately, let's provide an alternative.

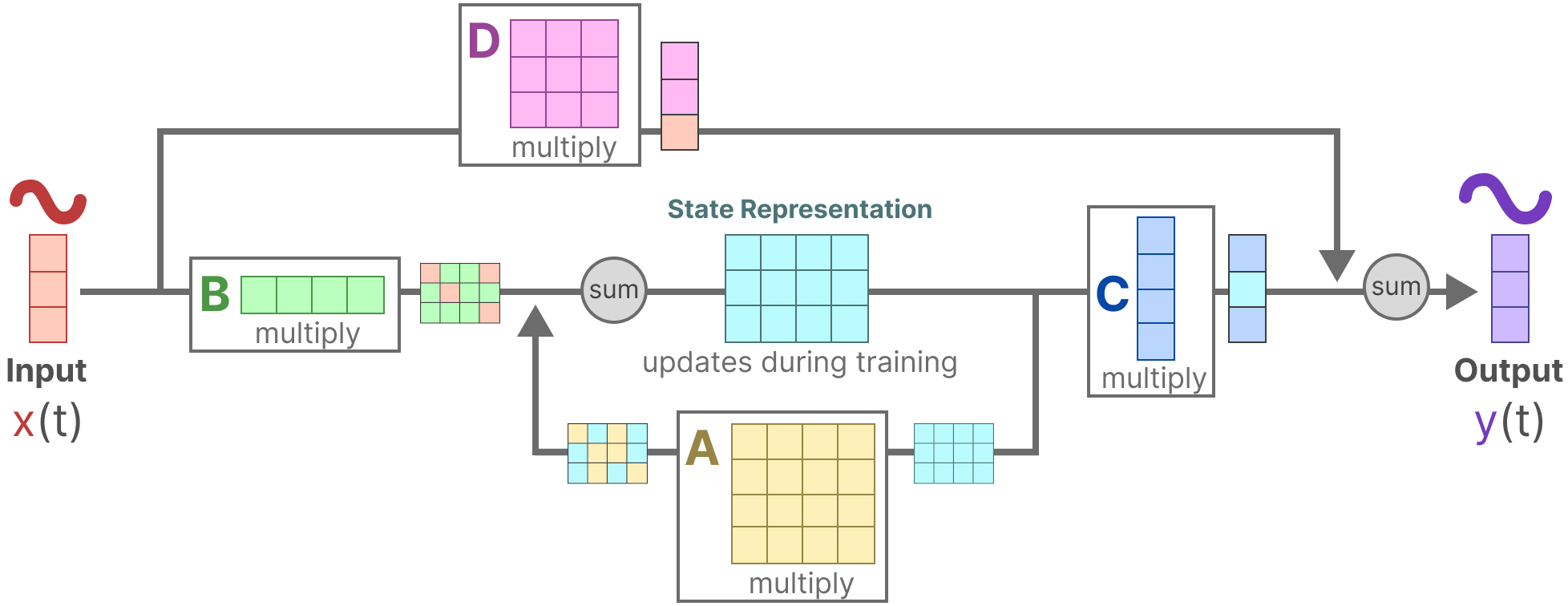

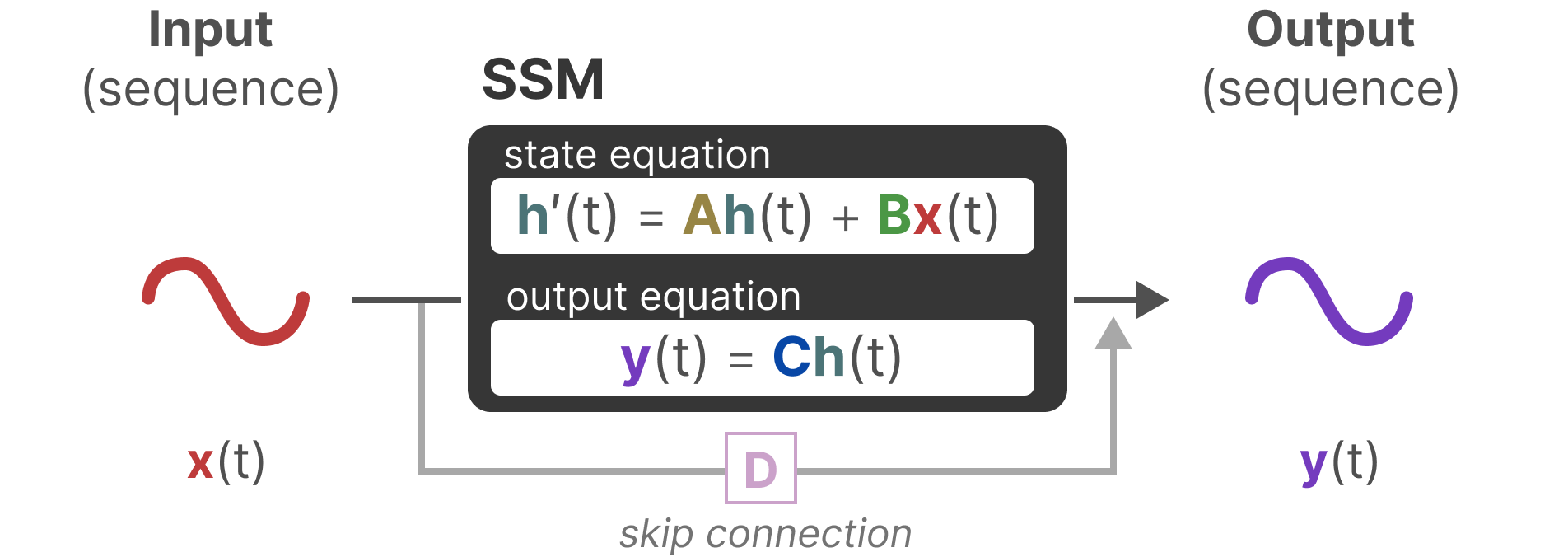

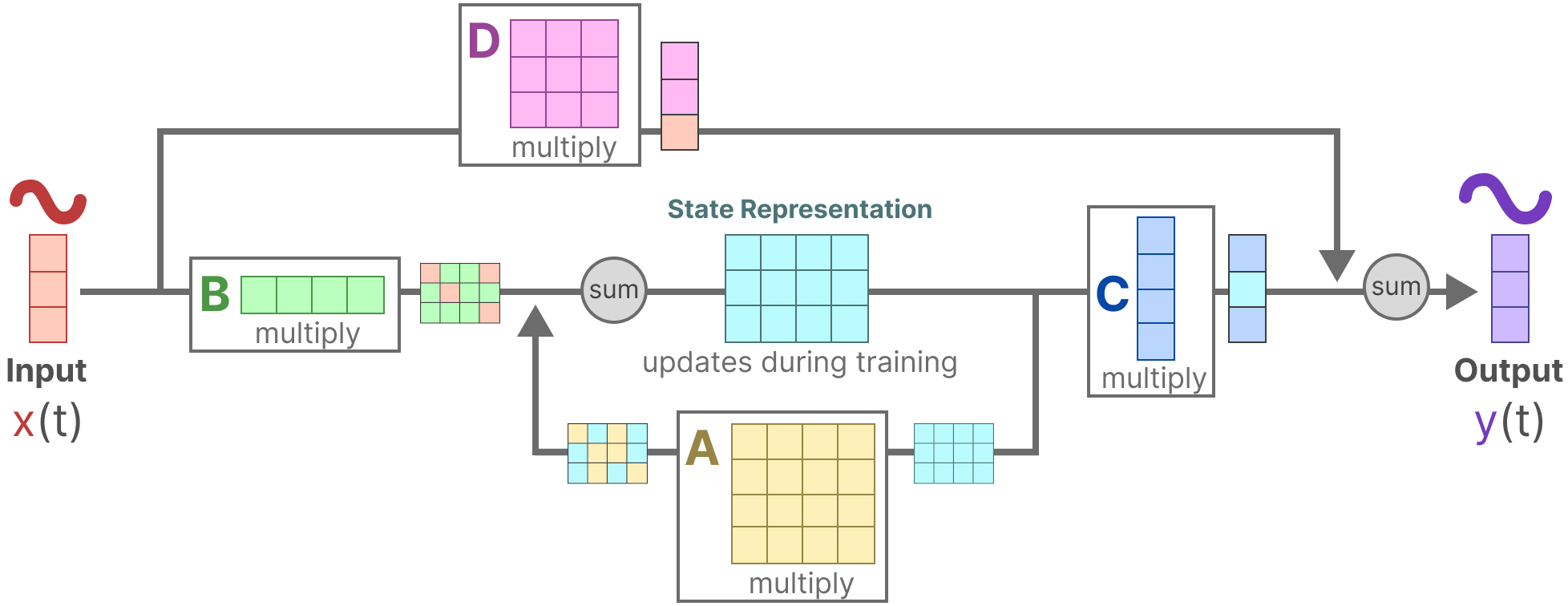

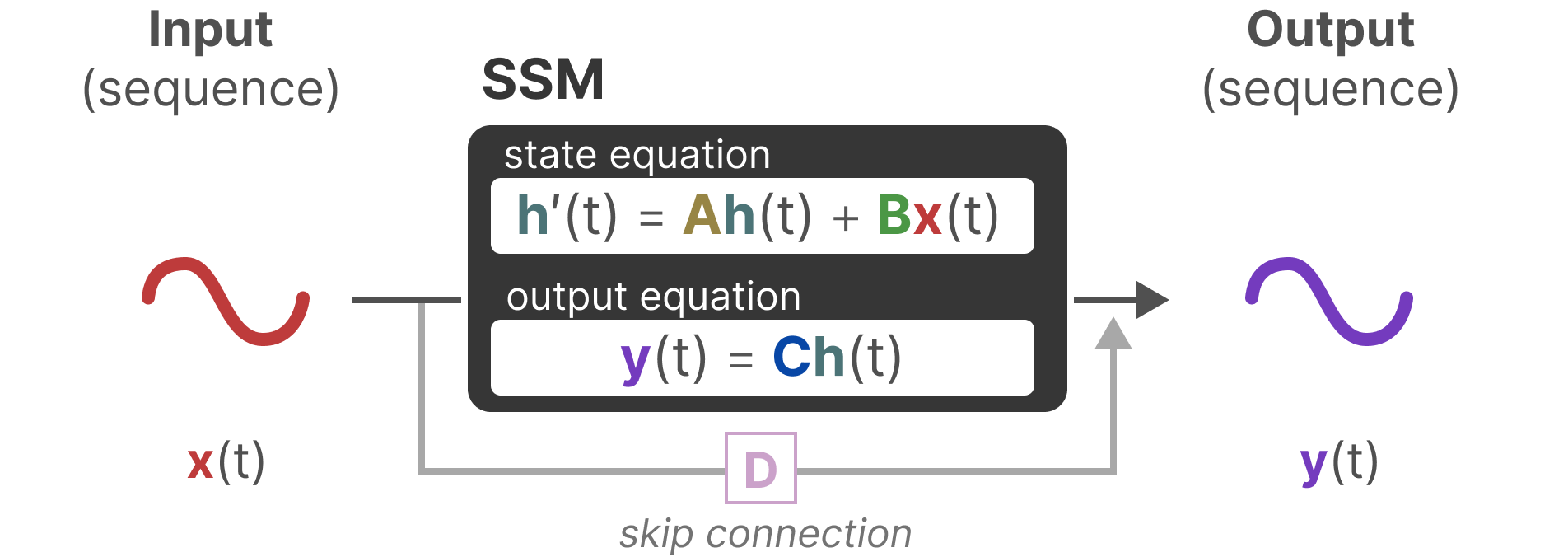

[A Visual Guide to Mamba and State Space Models](https://newsletter.maartengrootendorst.com/p/a-visual-guide-to-mamba-and-state) introduces Mamba and state space models, an exciting alternative to the Transformer architecture discussed in Chapter 3. While the chapter focuses on decoder-based Transformer models, this blog post introduces readers to a fundamentally different approach to sequence modeling.

In exploring this alternative architecture, the blog post complements Chapter 3 by showing that there is more to this field than Transformers. Exciting hybrid architectures have even started [combining Transformer and Mamba blocks](https://www.ai21.com/blog/announcing-jamba).
