# [The Illustrated Stable Diffusion](https://jalammar.github.io/illustrated-stable-diffusion/) (Extends Chapters 9)

In Chapter 9, we cover the Vision Transformer (ViT) and explore how text and images can be modeled together through CLIP. We showcase how CLIP can be used to bring images to textual models, allowing them to "reason" about images as well as text.

But CLIP can also be used for the opposite, bringing text to vision models, allowing to use the input text as guidance for the images they create.

[The Illustrated Stable Diffusion](https://jalammar.github.io/illustrated-stable-diffusion/) explores the role of CLIP in stable diffusion which it does in the highly visual way that you can expect having read the book.

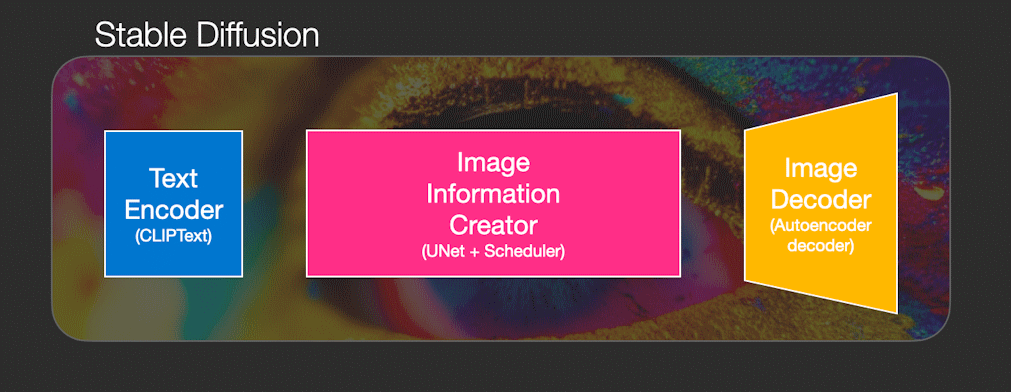

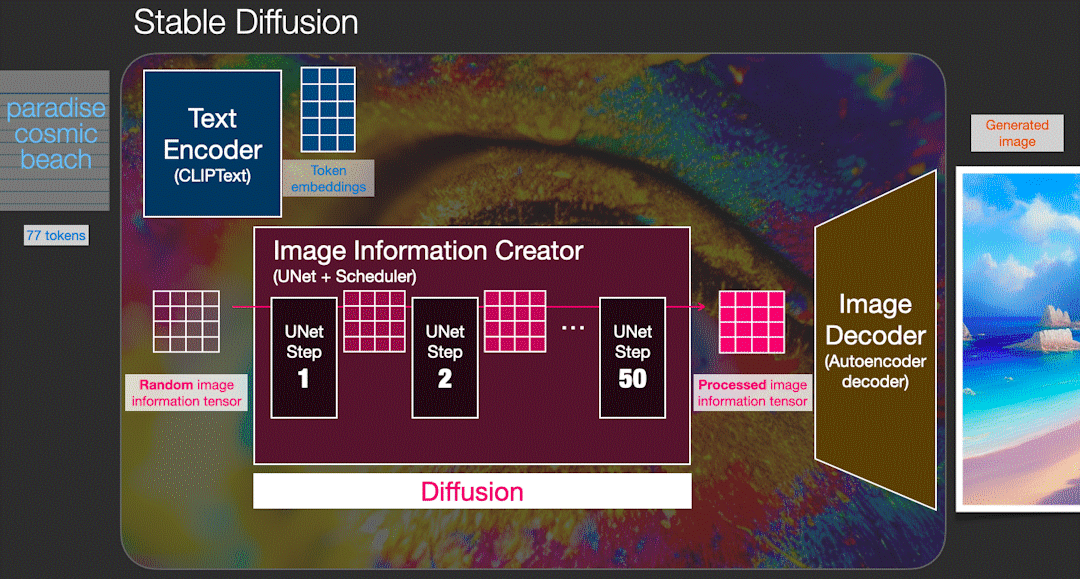

It further illustrates stable diffusion's inner working, showing how it combines CLIP's capabilities with advanced diffusion models to create high-quality images from text prompts.

It further illustrates stable diffusion's inner working, showing how it combines CLIP's capabilities with advanced diffusion models to create high-quality images from text prompts.

The book focuses mostly on generating text but the process of generating images has significant overlap. The illustrated guide complements Chapter 9 well as more architectures, technologies and methods enter different domains and modalities.

The book focuses mostly on generating text but the process of generating images has significant overlap. The illustrated guide complements Chapter 9 well as more architectures, technologies and methods enter different domains and modalities.

It further illustrates stable diffusion's inner working, showing how it combines CLIP's capabilities with advanced diffusion models to create high-quality images from text prompts.

It further illustrates stable diffusion's inner working, showing how it combines CLIP's capabilities with advanced diffusion models to create high-quality images from text prompts.

The book focuses mostly on generating text but the process of generating images has significant overlap. The illustrated guide complements Chapter 9 well as more architectures, technologies and methods enter different domains and modalities.

The book focuses mostly on generating text but the process of generating images has significant overlap. The illustrated guide complements Chapter 9 well as more architectures, technologies and methods enter different domains and modalities.